A Pegasystems survey of 5,000 consumers in countries including the United States, the United Kingdom, France, Germany, and Japan found many people aren't sold on the benefits of AI, but the company is aiming to address one of their main objections.

There is widespread cynicism about companies, judging by the survey results. 68% of respondents said organisations have an obligation to do what is morally right for the customer, beyond what is legally required. But 65% don’t trust that companies have their best interests at heart. Consumers do not believe businesses actually care about them or show enough empathy for their individual situations.

Just 40% of respondents agreed that AI has the potential to improve the customer service of businesses they interact with, and only 30% felt comfortable with businesses using AI to interact with them. A mere 9% said they were ‘very comfortable’ with the idea. There was also concern about machines taking people's jobs (mentioned by a third of respondents), and 27% also cited the ‘rise of the robots and enslavement of humanity’ as a concern.

Some 70% of respondents prefer to speak to a human rather than an AI system or a chatbot for customer service, and 69% are more inclined to tell the truth to a human than to an AI system. For life-and-death decisions, an overwhelming (if surprisingly small) 86% said they trust humans more than AI.

Only 12% agreed that AI can tell the difference between good and evil, and 56% of customers don't believe it is possible to develop machines that behave morally. Only 12% believe they have ever interacted with a machine that has shown empathy.

"Our study found that only 25% of consumers would trust a decision made by an AI system over that of a person regarding their qualification for a bank loan," said Pegasystems vice president of decisioning and analytics Rob Walker.

"Consumers likely prefer speaking to people because they have a greater degree of trust in them and believe it's possible to influence the decision, when that's far from the case. What's needed is the ability for AI systems to help companies make ethical decisions. To use the same example, in addition to a bank following regulatory processes before making an offer of a loan to an individual, it should also be able to determine whether or not it’s the right thing to do ethically.

"An important part of the evolution of artificial intelligence will be the addition of guidelines that put ethical considerations on top of machine learning. This will allow decisions to be made by AI systems within the context of customer engagement that would be seen as empathetic if made by a person.

"AI shouldn’t be the sole arbiter of empathy in any organisation and it’s not going to help customers to trust organizations overnight. However, by building a culture of empathy within a business, AI can be used as a powerful tool to help differentiate companies from their competition.”

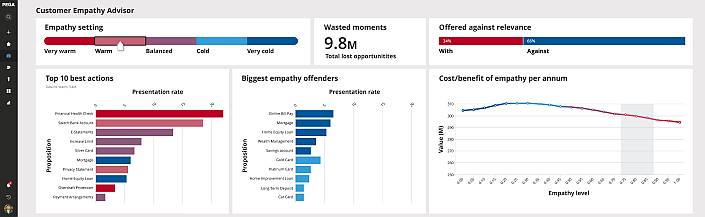

So Pegasystems is introducing its Customer Empathy Advisor to help its customers do just that.

This new capability of Pega Customer Decision Hub is intended to help companies use AI to build more sustainable customer relationships. Increasing the level of empathy in AI-assisted conversations is expected to build more trust, loyalty, and value with each customer.

Most AI just adds more spam to people’s lives, sacrificing long-term loyalty for a few short-term sales, the company believes. For example, just because AI could likely sell a high-interest loan to a low-income family doesn’t mean it should. Customer Empathy Advisor helps operationalise empathy at scale for the benefit of both parties.

Pega Customer Decision Hub analyses customer data to guide customer-facing agents and virtual assistants to take the next best action with each customer. The new Customer Empathy Advisor feature takes this analysis a step further, examining how empathetic these recommendations are and suggesting a more compassionate approach. Contrary to popular belief, the company asserted, that approach is often the most profitable strategy as well.

The feature takes a three-step approach.

The first step is to analyse current strategies in terms of relevance, suitability, value, context, intent, mood, etc., all from the customer's perspective. Engagement analysts can then pinpoint which actions engender the most trust from customers and which drive them away from the brand. A simple, intuitive slider lets stakeholders adjust empathy levels and see the impact the change has on relevant KPIs.

Step two is predicting the return on empathy – for example, is the action, offer, or suggestion likely to increase the customer’s lifetime value? Will it likely cause customer churn or increase exposure to risk? Is it deliberately misselling a product or unable to explain it?

Finally, businesses can automatically deploy these strategies through Pega Infinity, applying empathy in all marketing, sales, and customer service interactions, both online and offline, even if that means taking no action. This works at the level of individual customers, not just segmented groups.

"Most businesses are conditioned to try and squeeze every last drop of profit from each customer. But this predatory mentality distorts the fact that practicing a little empathy is not only good for the customer, it's good for business," said Walker.

"We've always believed that the only way to win a customer's heart is to first walk a mile in their shoes. Today we're taking a step closer in this pursuit by instilling empathy in customer interactions – which is ultimately the right way to do business for everyone."

Commonwealth Bank head of data and decisioning strategy, retail bank, Alex Burton told iTWire he found the idea interesting, and that it "aligns well with the 'should we?' language going through CBA at the moment."

Not surprisingly, the bank has no specific plans at this stage for using the newly announced product.

The Customer Empathy Advisor will be available to all Pega Customer Decision Hub clients near the end of 2019.

Disclosure: The writer attended PegaWorld 2019 as a guest of the company.